Static Site Generation is NextJS' Killer Feature

January 31, 2022

I've found static HTML export to be an extremely powerful feature of NextJS, one that I keep coming back to (you are reading an SSG page from NextJS right now).

The SEO benefits of static site generation (SSG) are well documented, and it's hard to beat the page load performance of a website that just serves up HTML, CSS, and tiny bundles of JavaScript.

What appeals to me most about SSG is:

- It allows a website to pre-render all of its content once per build rather than once per request. Any code that runs during a typical user visit is therefore focused solely on providing the interactivity that features on the page need, not on rendering the page itself.

- It allows for extreme page performance and dirt-cheap hosting, especially when used in combination with a CDN like AWS CloudFront.

- It provides a very simple mental model: what you see locally in your `build` folder is exactly what you serve users when they visit your website. No abstract "serverless" functions or complex node servers that only work on Vercel. To deploy, just move your build folder online.
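Here is a toy, framework-free TypeScript sketch of the build-once mental model. It is not how NextJS works internally. Each page is rendered exactly once, at build time, into a map from output file path to HTML. Writing every entry of that map to disk would give you the folder you upload. The `Page` type, `buildSite`, and the `route/index.html` file layout are assumptions made for illustration.

```typescript
// Toy build step (not NextJS internals): render every page once, at build
// time, into a map of output file path -> HTML. That map is the whole site.

type Page = { route: string; title: string; body: string };

// Escape text so it can be embedded safely in HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render one page to a complete HTML document.
function renderPage(page: Page): string {
  return (
    "<!doctype html><html><head><title>" +
    escapeHtml(page.title) +
    "</title></head><body><p>" +
    escapeHtml(page.body) +
    "</p></body></html>"
  );
}

// Map "/artists/a1" to "artists/a1/index.html" (an assumed layout) so a
// plain static file server can resolve the URL with no custom logic.
function outputPathFor(route: string): string {
  const trimmed = route.replace(/^\/+|\/+$/g, "");
  return trimmed === "" ? "index.html" : trimmed + "/index.html";
}

// The "build": runs once per deploy, never once per request.
function buildSite(pages: Page[]): Map<string, string> {
  const files = new Map<string, string>();
  for (const page of pages) {
    files.set(outputPathFor(page.route), renderPage(page));
  }
  return files;
}
```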

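Also, if you're willing to run a full server rather than a CDN-only setup, you can take advantage of Incremental Static Regeneration (ISR), which lets NextJS regenerate individual static pages after deployment without a full rebuild.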
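Below is a minimal sketch of ISR. It assumes a hypothetical `pages/top-artists.tsx` page and uses a stubbed `fetchTopArtists` helper in place of a real API call. The page component itself is omitted. The ISR-specific part is the `revalidate` field returned from `getStaticProps`. NextJS keeps serving the cached page, and a request that arrives after that window triggers a background regeneration. This needs a running NextJS server, so it does not apply to a plain static export.

```typescript
// Sketch of a hypothetical pages/top-artists.tsx page using ISR.

type Artist = { id: string; name: string };
type TopArtistsProps = { artists: Artist[]; generatedAt: string };

// Hypothetical stand-in for a real API call (e.g. to the Spotify Web API).
async function fetchTopArtists(): Promise<Artist[]> {
  return [
    { id: "a1", name: "Artist One" },
    { id: "a2", name: "Artist Two" },
  ];
}

// Runs at build time, and again whenever NextJS regenerates the page.
export async function getStaticProps(): Promise<{
  props: TopArtistsProps;
  revalidate: number;
}> {
  const artists = await fetchTopArtists();
  return {
    props: { artists, generatedAt: new Date().toISOString() },
    // Once 3600 seconds have passed, the next request still receives the
    // cached page but triggers a regeneration in the background.
    revalidate: 3600, // illustrative value
  };
}
```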

When you combine these features, they lead to some really interesting possibilities, which we'll talk about in the next two sections.

NextJS is almost like two different projects in one.

First, it's a way to forgo the traditional client-server architecture to bootstrap products quickly.

Second, it's a way to generate static sites from data.

For this I give NextJS credit. It would be difficult to do either one of these things well, let alone both. But I don't think the SSG side of the project gets enough attention. I suspect that's partly because Vercel is incentivized to funnel users to its own platform in order to make a profit, and SSG does not require any special deployment environment to thrive[1].

That's not to say that SSG is underutilized. In fact, it's quite the opposite.

I believe that the downward trend in search engine quality is partially due to the proliferation of data-driven SSG, exactly the type of stuff that NextJS helps enable. When I say that SSG is NextJS' killer feature, I mean it literally. SSG is a death knell for search results because it makes it so simple to anticipate a query and put a low-quality page in front of it at next to $0 cost. I'll make the case for that soon, but first let's cover the SSG basics so we understand how it works.

Designing data-driven websites

To illustrate SSG, let's consider a simple example of a "data-driven" website.

Say you want to make a website that showcases the top 1,000 Spotify artists. For a given artist, you'll display their top songs, number of streams, genres, and related artists.

To make this work, you'll need to connect to the Spotify Web API, where you can pull all of this data and more.

Here are 3 architectures that could be used to build this app:

Client Side Render via SPA

With a SPA, you would set up client-side routing, and make client-side requests to fetch data from the Spotify API (or likely from a proxy server, rather than the API directly, so you can obscure your API key and arrange the data in a more useful way before sending it to the client).

Advantages

- Will always fetch the latest data from the Spotify Web API

- Simple client-side setup with a single `index.html` file

- Separation of concerns means that the backend can run anywhere, in any language

Disadvantages

- 2x the deployments and maintenance (assuming client + server are separate)

- Responsible for own server and security of it

- SEO performance suffers since data is not present in the initial page load

- Initial page load is not optimal compared with static sites due to large bundle size
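Much of the proxy server's job in this setup is reshaping data. Here is a sketch of that step. It assumes the proxy has already fetched an artist and that artist's top tracks from the Spotify Web API. The types declare only the few Spotify fields used here, and `toArtistSummary` and `ArtistSummary` are hypothetical names. Because this code runs on the proxy, the API key stays there, and the browser only receives the trimmed-down result.

```typescript
// Only the fields this sketch uses; the real Spotify objects contain more.
type SpotifyArtist = {
  id: string;
  name: string;
  genres: string[];
  popularity: number;
  followers: { total: number };
};
type SpotifyTrack = { id: string; name: string; popularity: number };

// Hypothetical compact shape that the proxy sends to the SPA.
type ArtistSummary = {
  id: string;
  name: string;
  genres: string[];
  followers: number;
  topSongs: string[];
};

// Runs on the proxy server, never in the browser.
function toArtistSummary(
  artist: SpotifyArtist,
  topTracks: SpotifyTrack[],
  maxSongs = 5,
): ArtistSummary {
  // Copy before sorting so the caller's array is not mutated.
  const topSongs = [...topTracks]
    .sort((a, b) => b.popularity - a.popularity)
    .slice(0, maxSongs)
    .map((track) => track.name);
  return {
    id: artist.id,
    name: artist.name,
    genres: artist.genres,
    followers: artist.followers.total,
    topSongs,
  };
}
```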

NextJS with SSR

If you don't feel like rolling your own server, you can have NextJS fetch data server-side for you. It really is quite simple: you export an async function called `getServerSideProps` from the page, and NextJS auto-magically runs it in a Node environment on every request. You can keep your API keys hidden in these functions as well.

Advantages

- Will always fetch the latest data from the Spotify Web API

- No need to deploy and maintain a separate server environment

- NextJS abstracts the server side code, making it feel like part of your React app

Disadvantages

- Page HTML is generated on each request, resulting in slower performance

- SEO performance can suffer relative to SSG, since every page must be rendered on the server before the first byte is sent

- Isomorphic JS leads to headaches
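Here is a sketch of the SSR approach for a hypothetical dynamic route file, `pages/artists/[id].tsx`, with the page component omitted. NextJS calls `getServerSideProps` on the server for every request. `fetchArtistFromApi` is a hypothetical stub standing in for a Spotify Web API call; a real version would read its token from a server-side environment variable. The context type is a simplified local stand-in for NextJS's own. Returning `{ notFound: true }` tells NextJS to respond with its 404 page.

```typescript
// Sketch of a hypothetical pages/artists/[id].tsx page using SSR.

type ArtistPageProps = { id: string; name: string; renderedAt: string };
type SsrContext = { params?: { id?: string } }; // simplified stand-in type
type SsrResult = { props: ArtistPageProps } | { notFound: true };

// Hypothetical server-only helper. A real one would call the Spotify Web API
// with a token read from a server-side environment variable, so the secret
// never reaches the browser.
async function fetchArtistFromApi(id: string): Promise<{ name: string } | null> {
  const fakeDb = new Map<string, string>([["abc", "Artist ABC"]]);
  const name = fakeDb.get(id);
  return name === undefined ? null : { name };
}

// NextJS calls this on the server for every request to the page.
export async function getServerSideProps(context: SsrContext): Promise<SsrResult> {
  const id = context.params?.id ?? "";
  const artist = await fetchArtistFromApi(id);
  if (artist === null) {
    return { notFound: true }; // NextJS responds with its 404 page
  }
  return {
    props: { id, name: artist.name, renderedAt: new Date().toISOString() },
  };
}
```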

NextJS with SSG

If you know what data you want to display ahead of time, SSG is your best friend. With this architecture, you write a function (`getStaticPaths`) that runs in Node at build time and pre-calculates all of the paths that you would like to render in the website. For each of these paths, a second function (`getStaticProps`) can pre-fetch data and bake it directly into the HTML itself.

Advantages

- Best SEO performance because data is well structured in static pages

- Fastest load times because pages are pre-rendered and can be cached by a CDN

- Extremely easy + cheap to deploy because there is no server-side code

Disadvantages

- Page data does not update unless the page is re-built

- Advanced use cases like ISR require the introduction of a server

- Requires that you define the content ahead of time
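Here is an SSG sketch of a hypothetical `pages/artists/[id].tsx` page, with the page component omitted. A hard-coded `TOP_ARTISTS` list stands in for a build-time fetch from the Spotify Web API. `getStaticPaths` enumerates every page to generate, and `getStaticProps` runs once per path at build time. Setting `fallback: false` means any id that isn't listed gets a 404 instead of being rendered on demand.

```typescript
// Sketch of a hypothetical pages/artists/[id].tsx page using SSG.

type Artist = { id: string; name: string; genres: string[] };
type PathsResult = { paths: { params: { id: string } }[]; fallback: false };
type PropsResult = { props: { artist: Artist } } | { notFound: true };

// Hypothetical build-time data. A real build would fetch the top 1,000
// artists from the Spotify Web API here instead.
const TOP_ARTISTS: Artist[] = [
  { id: "a1", name: "Artist One", genres: ["pop"] },
  { id: "a2", name: "Artist Two", genres: ["jazz", "soul"] },
];

// Step 1 (build time): list every page that should exist.
export async function getStaticPaths(): Promise<PathsResult> {
  return {
    paths: TOP_ARTISTS.map((artist) => ({ params: { id: artist.id } })),
    fallback: false, // ids not listed here get a 404; nothing renders per request
  };
}

// Step 2 (build time): NextJS calls this once per path from step 1 and bakes
// the returned props into that page's HTML.
export async function getStaticProps(context: {
  params: { id: string };
}): Promise<PropsResult> {
  const artist = TOP_ARTISTS.find((a) => a.id === context.params.id);
  if (artist === undefined) {
    return { notFound: true };
  }
  return { props: { artist } };
}
```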

If you are a publisher or run a content-driven site, the SEO benefits alone make SSG worthwhile. If you are a hobbyist then the simplicity is appealing. If you are frugal then the cost can't be beat. Overall I just think it's a really elegant approach.

That said, like many other pure and good and open source technologies, this one has been co-opted by publishers who seem intent on hijacking the internet for clicks.

The dark side of SSG

One of the first examples I can remember of a company using realtime analytics to generate content at scale was when BuzzFeed began A/B testing headlines and updating them in realtime to converge on the one that got the most traction.

Since then, this practice has gone mainstream.

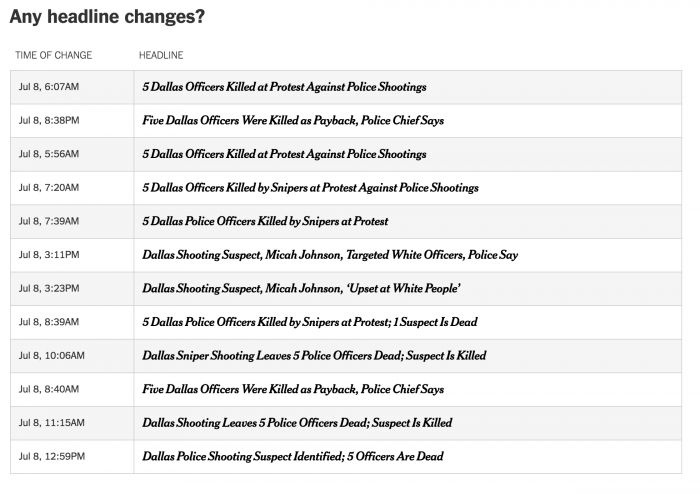

When a police officer was shot in Dallas in 2016, The New York Times changed the story’s headline more than 10 times in less than six hours.

Courtesy of niemanlab.org

Each change was informed by traffic shifts and reader response across social channels, which NYT editors monitor closely using their in-house analytics dashboard called Stela. Though editors certainly have a role to play in writing headlines, the driving force and final say is now behavioral data.

This isn't SSG per se, but it demonstrates how closely behavioral data is linked to content at major publications. Newspapers are trying to fill a niche that our attention creates, just as other publishers do with their statically generated pages.

We're at the point now where this type of technology is both mainstream and commodified. A publisher only needs a source of data that is a decent proxy for what people are looking at or searching for. From there, creating web content at scale to reflect that behavior is easier than ever with tools like NextJS and SSG [2].

Skins and Shims

There are 2 main categories of websites that I notice engaging in data-driven publishing in order to game search results via SSG. Let's call them "skins" and "shims".

1. Skins

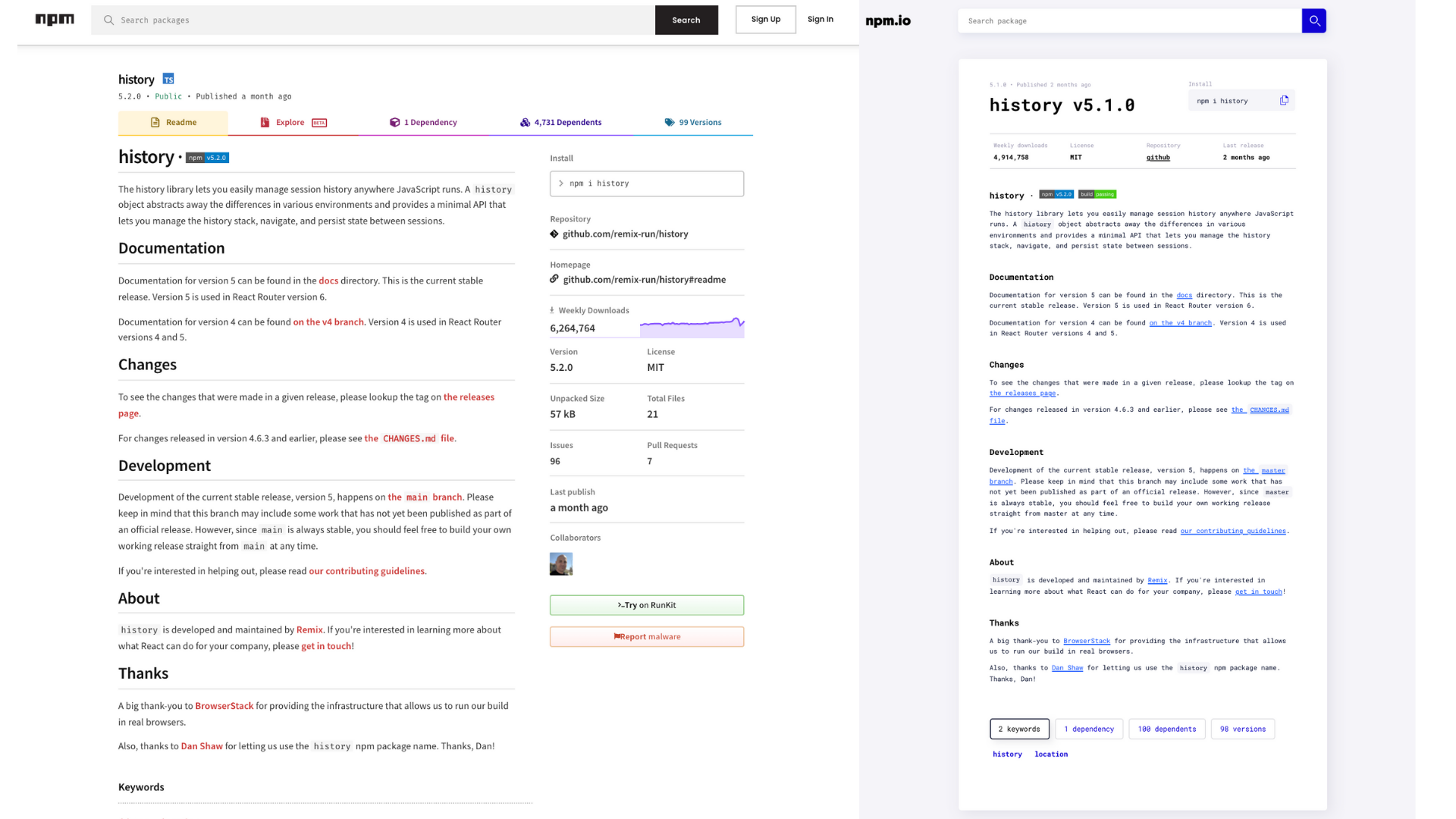

There's a site called npm.io that makes pages that are exact copies of content on npmjs.com. Since all of the content on npm is publicly available, it makes for an easy target.

npm.io is an example of a site that jumped to #2 in the search rankings simply by making an exact copy of #1.

The fact that npm.io is ~1 month behind npmjs.com suggests that it uses SSG; otherwise the page would be up to date. From the npm.io author's perspective, the `history` library probably isn't popular enough to warrant rebuilding more often than once a month.

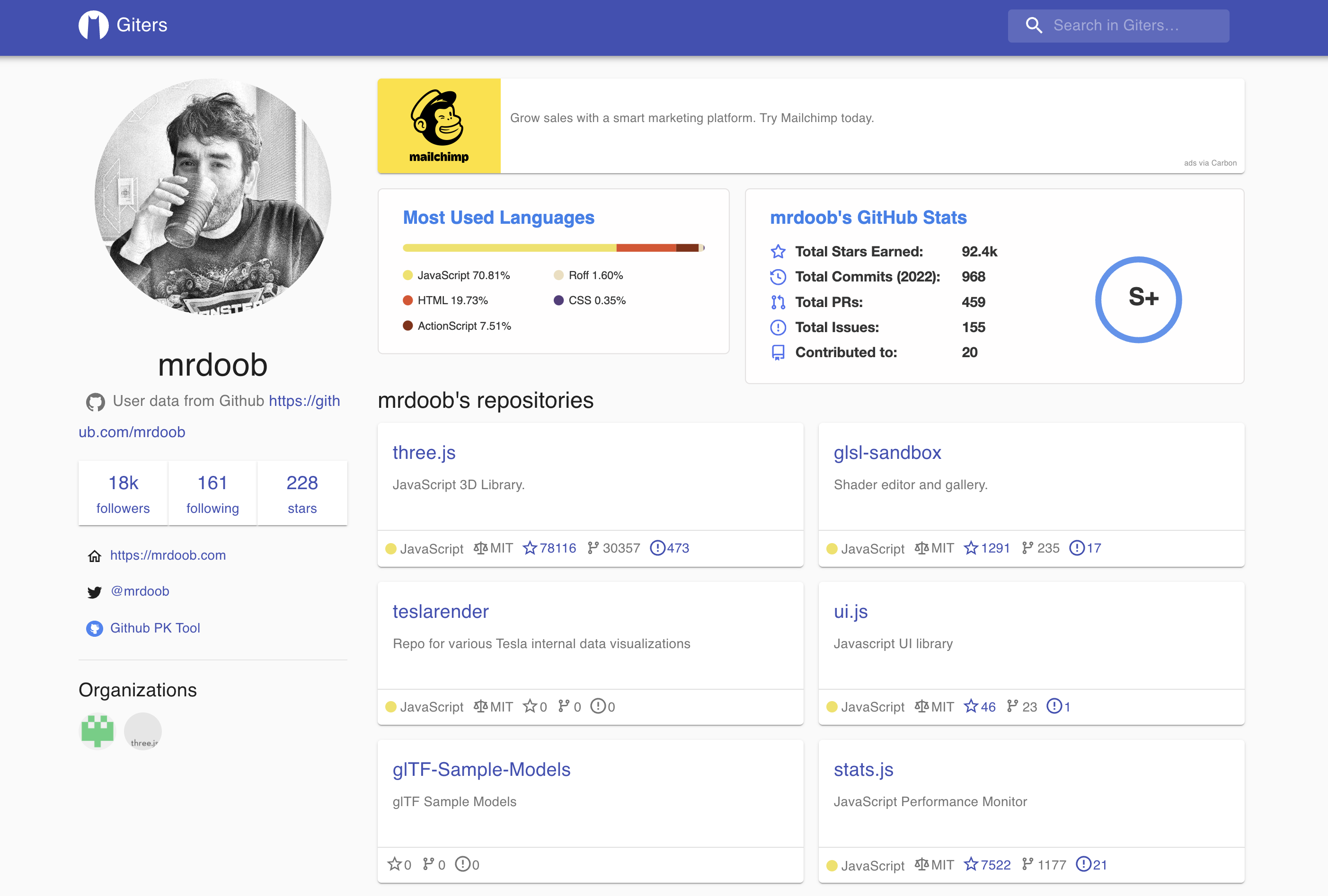

There's another one called giters.com that (poorly) skins Github profiles and slaps an ad at the top of each page:

2. Shims

I call these sites "shims" because they generate the bare minimum static content required to get listed on Google, usually for obscure or long-tail queries. Typically the content itself is just the original search query, and maybe some related search queries or a subset of results from a database that the publisher maintains.

yellowbook.com is a good example of this. The expectation with a site like this is that queries will have a long-tail distribution since there are so many unique names. So naturally, the publisher strives to generate a static page for every name that has ever been queried in order to get yellowbook.com ranked on the first page of Google. Incidentally, this is a great use case for Incremental Static Regeneration. Any time a unique query is made for the first time, a single new index.html is added to a bucket behind a CDN, somewhere.
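Here is a sketch of how the ISR pattern described for yellowbook.com could be wired up in NextJS. It assumes a hypothetical `pages/search/[slug].tsx` route, and a stubbed `lookupRecords` helper stands in for the publisher's own database. `getStaticPaths` returns no paths up front. With `fallback: "blocking"`, the first request for an unseen slug is rendered on the server, then cached and served statically to later visitors. `revalidate` controls how often that cached page is refreshed. This requires a running NextJS server rather than a plain static export.

```typescript
// Sketch of a hypothetical pages/search/[slug].tsx page for a "shim" site.

type SearchProps = { query: string; results: string[] };

// Normalize a raw query like "Jane  Doe" into a URL slug like "jane-doe".
function slugify(query: string): string {
  return query
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Hypothetical stand-in for the publisher's own database lookup.
async function lookupRecords(slug: string): Promise<string[]> {
  const records = new Map<string, string[]>([["jane-doe", ["Jane Doe, Springfield"]]]);
  return records.get(slug) ?? [];
}

// Nothing is pre-built; every page is created on its first request.
export async function getStaticPaths(): Promise<{
  paths: { params: { slug: string } }[];
  fallback: "blocking";
}> {
  return { paths: [], fallback: "blocking" };
}

export async function getStaticProps(context: {
  params: { slug: string };
}): Promise<{ props: SearchProps; revalidate: number }> {
  const slug = slugify(context.params.slug);
  const results = await lookupRecords(slug);
  return {
    // The "content" is little more than the query itself plus a few records.
    props: { query: slug.replace(/-/g, " "), results },
    revalidate: 86400, // refresh the cached page at most once a day (illustrative)
  };
}
```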

I checked out the yellowbook.com page that can be found on Google when you search for my name, and sure enough, the search results are baked right into the HTML. And yes, it loads fast.

You can find "shims" all over Google when you start searching for really specific stuff. As long as at least one other person has searched for it in the past, it's likely that some publisher has generated a shim for it [3].

Looking Ahead

Proper SSG arrived in NextJS 9.3, less than two years ago as of this writing, so it's too early to say what impact it will have on the web at large. Still, I think it's safe to say that the proliferation of data-driven SSG will continue.

On one hand, it's great for small publishers. And for whoever made giters.com over a long holiday weekend.

On the other hand, it's not great for search engine users, assuming that this statically generated content has a low signal-to-noise ratio (which is true from my experience).

That said, if this content ranks well on Google, it must be there for a reason, right? Search results are clearly meant to match search behaviors, and I'm sure Google and others are doing a great job of this.

I'm still trying to figure out what I think about all this, and it's not clear to me what changes to search engines, if any, would be helpful.

They could make tweaks to penalize sites with a high degree of overlap with primary sources, but what implications for search-at-large would that have?
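"Overlap with primary sources" could be measured in many ways. One simple, well-known option is Jaccard similarity over word shingles, which are overlapping runs of k words. The sketch below uses k = 3, and its function names are hypothetical. It only illustrates the idea and makes no claim about how Google or any other search engine actually scores pages. A scraped "skin" would be expected to score close to 1 against the page it copies.

```typescript
// Split text into a set of lowercase word k-shingles (runs of k words).
function shingles(text: string, k = 3): Set<string> {
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  const result = new Set<string>();
  for (let i = 0; i + k <= words.length; i++) {
    result.add(words.slice(i, i + k).join(" "));
  }
  return result;
}

// Jaccard similarity |A ∩ B| / |A ∪ B|, from 0 (disjoint) to 1 (identical).
function jaccard(a: Set<string>, b: Set<string>): number {
  if (a.size === 0 && b.size === 0) return 1; // two empty texts count as identical
  let shared = 0;
  a.forEach((item) => {
    if (b.has(item)) shared++;
  });
  return shared / (a.size + b.size - shared);
}

// Hypothetical overlap score between a page and the primary source it copies.
function overlapScore(page: string, source: string, k = 3): number {
  return jaccard(shingles(page, k), shingles(source, k));
}
```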

They could increase personalization, such that Google learns that I don't ever want to click on npm.io and de-lists it for my account, but what implications for privacy (and filter bubbles) would that have?

What I do know is that SSG unlocks a lot of possibilities for publishers. It will be interesting to see how search engines react.

[1] In fairness to NextJS, they state in their docs "We recommend using Static Generation (with and without data) whenever possible because your page can be built once and served by CDN, which makes it much faster than having a server render the page on every request."

[2] SSG is not a new concept, and there are many other tools out there. I'm focusing on NextJS just because it's the only SSG framework I've used, and it seems to be the most popular. See https://jamstack.org/generators/ for others.

[3] I have come across many sites like this over the years but am currently blanking on additional specific examples. If you have any, let me know and I will add them to this section.